I was recently given the task of improving the accessibility of a statistical report, with the goal of meeting level AA of the Web Content Accessibility Guidelines 2.0. The report contains about 600 pages of tables and graphs in a DOC file. The rule of thumb for tables is to ensure that a visually impaired person can use the tab key to navigate easily across cells in a row from the top left of the table to the bottom right. Additionally, tables and graphs should contain alternative titles and descriptions.

Last year, I produced the report with the R packages xtable and R2HTML. James Falkofske notes that hypertext markup language (HTML) files are inherently fluid, allowing readers with a wide range of abilities and assistive technologies to access the content. HTML tables with little or no nesting generally meet the navigation guidelines, even when opened in Word and saved as a word processor document. However, alternative titles and descriptions are not added to tables and graphs automatically.


It would be very time-consuming to retroactively add alternative text to 600 pages of tables and graphs in Word, so I looked for ways to modify the defaults in the xtable and R2HTML packages. The example below shows that it’s possible to add alternative text while writing tables and graphs to an HTML file. For tables, the goal is to add title and summary attributes to the `<TABLE>` tag; those attributes show up as alternative text when the HTML file is opened in Word. For graphs, the goal is to add title and “alt” attributes to the `<IMG>` tag. The graph’s “alt” attribute carries over to the alternative description field when the HTML file is opened in Word, but the title does not. Even so, title attributes let web browsers display a hover title (tooltip) for graphs as well as tables. Try it out by hovering your cursor over the table and graph below.
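When titles have to be written for hundreds of tables and graphs, it may be easier to build the attribute strings with a function than to type them by hand. The sketch below uses three hypothetical helpers, `escape_attr()`, `table_attributes()`, and `img_tag()`, which are not part of xtable or R2HTML and rely only on base R. Escaping matters here because the modified `HTMLInsertGraph()` in the example wraps `Title` and `Alt` in single quotes, so an unescaped apostrophe in a title would end the attribute value early.

```r
# Hypothetical helpers (not part of xtable or R2HTML), base R only.

# Escape characters that could end or corrupt an HTML attribute value.
escape_attr <- function(x) {
  x <- gsub("&", "&amp;", x, fixed = TRUE)  # must run first
  x <- gsub("<", "&lt;", x, fixed = TRUE)
  x <- gsub(">", "&gt;", x, fixed = TRUE)
  x <- gsub('"', "&quot;", x, fixed = TRUE)
  x <- gsub("'", "&#39;", x, fixed = TRUE)
  x
}

# Build a string for print.xtable(html.table.attributes = ...):
# a border plus title and summary attributes on the <TABLE> tag.
table_attributes <- function(title, summary = title, border = 1) {
  sprintf('border=%s title="%s" summary="%s"',
          border, escape_attr(title), escape_attr(summary))
}

# Build an <IMG> tag with a title (hover text) and alt (description).
img_tag <- function(src, title, alt = title, width = NULL, height = NULL) {
  size <- paste0(
    if (!is.null(width)) sprintf(' width="%s"', width) else "",
    if (!is.null(height)) sprintf(' height="%s"', height) else ""
  )
  sprintf('<img src="%s" title="%s" alt="%s"%s>',
          escape_attr(src), escape_attr(title), escape_attr(alt), size)
}

table_attributes("Table of days of frost and years of life expectancy")
```

The string returned by `table_attributes()` could be passed as `html.table.attributes` to `print()` on an xtable object, and `escape_attr()` could be applied to `Title` and `Alt` before they reach `HTMLInsertGraph()`.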

########################################

#Load libraries.

library(xtable) #for tables

library(R2HTML) #for graphs

library(datasets) #for data

########################################

#Initiate a HTML file to export results.

#The file can be named with a DOC extension to facilitate opening it with Word.

name.file.htm <- "Accessible Tables and Graphs.doc"

HTML.title(

"Add alternative titles and descriptions to tables and graphs in a HTML-based DOC file",

file = name.file.htm, HR = 1, append = F)

########################################

#Accessible table example

#Assign alternative title and description to a single object.

html.table.attributes <- paste('border=1',

'title="Table of days of frost and years of life expectancy"',

'summary="Table of days of frost and years of life expectancy"')

#The xtable(caption) argument places the title in the first row of the table, making it

#more difficult to designate table headers to repeat across multiple pages in a document.

#Produce the table title with HTML.title() instead.

HTML.title("Days of frost and years of life expectancy", file = name.file.htm, HR = 3)

#Create table and write to file.

data.frost.exp <- as.data.frame(state.x77[, c("Frost", "Life Exp")])

names(data.frost.exp)[2] <- "Life"

table.xtable <- xtable(data.frost.exp, digits = c(NA, 0, 2))

print(table.xtable, type = "html", html.table.attributes = html.table.attributes,

file = name.file.htm, border = 1, append = T)

HTML("<br>", file = name.file.htm)

########################################

#Accessible graph example

#Modify HTMLInsertGraph() by adding Title and Alt arguments.

HTMLInsertGraph <- function (GraphFileName = "", Caption = "",

Title = "untitled graph", Alt = "a graph",

GraphBorder = 1, Align = "center",

WidthHTML = 500, HeightHTML = NULL,

file = get(".HTML.file"), append = TRUE, ...) {

cat("n", file = file, append = append, ...)

cat(paste("<p align=", Align, "><img src='", GraphFileName,

"' title='", Title, "' alt='", Alt, "' border=", GraphBorder,

if (!is.null(WidthHTML))

paste(" width=", WidthHTML, sep = "")

else "",

if (!is.null(HeightHTML))

paste(" height=", HeightHTML, sep = "")

else "", ">", sep = "", collapse = ""),

file = file, append = TRUE, sep = "")

if (Caption != "")

cat(paste("<br><i class=caption>", Caption, "</i>"),

file = file, append = TRUE, sep = "")

invisible(return(TRUE))

}

#Assign alternative title and description to objects.

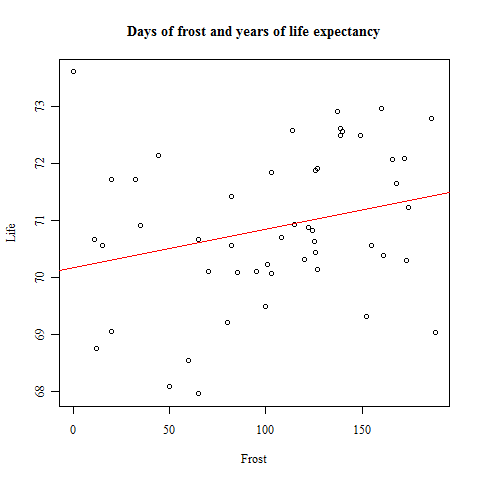

Title <- "Graph of days of frost and years of life expectancy"

Alt <- "Graph of days of frost and years of life expectancy"

name.file.plot <- "Days of frost and years of life expectancy.png"

png(name.file.plot, width = 350, height = 350)

plot(data.frost.exp, main = "Days of frost and years of life expectancy", family = "serif")

abline(lm(Life ~ Frost, data.frost.exp), col = "red")

dev.off()

HTMLInsertGraph(GraphFileName = name.file.plot, file = name.file.htm, Align = "left",

WidthHTML = 350, HeightHTML = 350, append = T, Title = Title, Alt = Alt)

HTML("<br>", file = name.file.htm)

Days of frost and years of life expectancy

| | Frost | Life |
|---|--:|--:|
| Alabama | 20 | 69.05 |
| Alaska | 152 | 69.31 |
| Arizona | 15 | 70.55 |
| Arkansas | 65 | 70.66 |
| California | 20 | 71.71 |
| Colorado | 166 | 72.06 |
| Connecticut | 139 | 72.48 |
| Delaware | 103 | 70.06 |
| Florida | 11 | 70.66 |
| Georgia | 60 | 68.54 |
| Hawaii | 0 | 73.60 |
| Idaho | 126 | 71.87 |
| Illinois | 127 | 70.14 |
| Indiana | 122 | 70.88 |
| Iowa | 140 | 72.56 |
| Kansas | 114 | 72.58 |
| Kentucky | 95 | 70.10 |
| Louisiana | 12 | 68.76 |
| Maine | 161 | 70.39 |
| Maryland | 101 | 70.22 |
| Massachusetts | 103 | 71.83 |
| Michigan | 125 | 70.63 |
| Minnesota | 160 | 72.96 |
| Mississippi | 50 | 68.09 |
| Missouri | 108 | 70.69 |
| Montana | 155 | 70.56 |
| Nebraska | 139 | 72.60 |
| Nevada | 188 | 69.03 |
| New Hampshire | 174 | 71.23 |
| New Jersey | 115 | 70.93 |
| New Mexico | 120 | 70.32 |
| New York | 82 | 70.55 |
| North Carolina | 80 | 69.21 |
| North Dakota | 186 | 72.78 |
| Ohio | 124 | 70.82 |
| Oklahoma | 82 | 71.42 |
| Oregon | 44 | 72.13 |
| Pennsylvania | 126 | 70.43 |
| Rhode Island | 127 | 71.90 |
| South Carolina | 65 | 67.96 |
| South Dakota | 172 | 72.08 |
| Tennessee | 70 | 70.11 |
| Texas | 35 | 70.90 |
| Utah | 137 | 72.90 |
| Vermont | 168 | 71.64 |
| Virginia | 85 | 70.08 |
| Washington | 32 | 71.72 |
| West Virginia | 100 | 69.48 |
| Wisconsin | 149 | 72.48 |
| Wyoming | 173 | 70.29 |
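Before converting hundreds of pages, it may be worth confirming that every table and image in the generated file actually carries the attributes. The sketch below defines a hypothetical helper, `audit_accessibility()`, that is not part of xtable or R2HTML. It scans the HTML text with base R regular expressions rather than a real HTML parser, so it is a quick check that assumes tags are written plainly, as `print()` on an xtable object and the modified `HTMLInsertGraph()` write them.

```r
# Hypothetical helper: count <table> tags missing title or summary attributes
# and <img> tags missing an alt attribute. Regex-based, not a full HTML parser.
audit_accessibility <- function(lines) {
  html <- paste(lines, collapse = "\n")
  # Opening tags only; "</table>" does not match "<table".
  tables <- regmatches(html, gregexpr("<table[^>]*>", html, ignore.case = TRUE))[[1]]
  imgs <- regmatches(html, gregexpr("<img[^>]*>", html, ignore.case = TRUE))[[1]]
  # TRUE for each tag that contains the named attribute followed by "=".
  has <- function(tags, name) {
    grepl(paste0("\\s", name, "\\s*="), tags, ignore.case = TRUE)
  }
  list(
    tables_missing_title = sum(!has(tables, "title")),
    tables_missing_summary = sum(!has(tables, "summary")),
    images_missing_alt = sum(!has(imgs, "alt"))
  )
}

example_lines <- c(
  '<table border=1 title="T" summary="S">',
  "<tr><td>1</td></tr></table>",
  "<p align=left><img src='a.png' title='A' alt='A' border=1>",
  "<TABLE border=1>",
  '<img src="b.png">'
)
audit_accessibility(example_lines)
```

Applied to the report, the call might be `audit_accessibility(readLines(name.file.htm, warn = FALSE))`; any nonzero count points to a table or graph that still needs alternative text.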